
Documentation for 3.17

Testing a GUI with TextTest and a Use Case Recorder

This is a step-by-step guide to testing a GUI with TextTest.

It assumes you have read and followed the instructions in the installation guide. There is a fair amount of overlap with the guide to getting started testing a non-GUI program, so it can be helpful to read that first. We will use a simple PyGTK GUI as an example. This is also Exercise 4 in the course material, so if you want to follow along, it's suggested that you download it from here.

Unzip it and then set the environment variable TEXTTEST_HOME to point at its "tests" directory. Much of what is said here should apply to any use case recorder, though. Text in italics is background information only. Naturally, you also need to install PyUseCase 3.x or newer from SourceForge before this will work.

For Java GUIs, look at JUseCase. If you are using Microsoft's .NET, use NUseCase.

If you use another GUI toolkit, write your own use case recorder and tell me about it!

First, create an initial application by running "texttest.py --new" as described in the guide for testing “hello world”. The main difference is that you should select "PyUseCase 3.x" from the GUI testing options! The application creation dialog should look something like this just before you press "OK":

The GUI-testing options drop-down list tries to cover all the bases, and will set the "use_case_record_mode" and "use_case_recorder" settings in your config file as necessary. If you choose "Other embedded use-case recorder", TextTest will set the environment variables USECASE_RECORD_SCRIPT and USECASE_REPLAY_SCRIPT to the relevant locations; these are the variables PyUseCase reads to decide which files to write and read.

Other GUI simulation tools can of course easily be wrapped by a script that would read the above variables and translate them into the format the simulation tool expects.
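As a minimal sketch of such a wrapper, assume a hypothetical simulation tool called `my-gui-sim` that accepts `--record <file>` and `--replay <file>` options (neither the tool nor its flags come from TextTest). The translation step might then look like this:

```python
import os

# Hypothetical tool name and flags: substitute whatever your simulation
# tool actually accepts.
TOOL = "my-gui-sim"


def build_tool_command(sut_args, environ=None):
    """Translate TextTest's use-case variables into a command line for
    the (hypothetical) wrapped simulation tool."""
    env = os.environ if environ is None else environ
    cmd = [TOOL]
    # Set by TextTest when a recorded use case should be replayed.
    replay_script = env.get("USECASE_REPLAY_SCRIPT")
    if replay_script:
        cmd += ["--replay", replay_script]
    # Set by TextTest when a new use case should be recorded.
    record_script = env.get("USECASE_RECORD_SCRIPT")
    if record_script:
        cmd += ["--record", record_script]
    # Everything after "--" is the system under test and its arguments.
    cmd.append("--")
    cmd.extend(sut_args)
    return cmd
```

A real wrapper script would then launch the tool, for instance with `subprocess.call(build_tool_command(sys.argv[1:]))`, after adapting the flags to the tool in question.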

Next, we create an “empty test” as for “hello world”. However, command line options are not sufficient to define a GUI test: we also need to define the use case that will be performed with the GUI. To do this, we go to the “Running” tab, then the “Recording” subtab, and click the “Record Use-Case” button (or we just choose it from the Actions menu or press "F9" and don't bother with the tabs, as we aren't changing any settings). This will start an instance of the dynamic GUI, as with running tests.

This in turn fires up our PyGTK bug system GUI with PyUseCase in record mode. We simply perform the actions that constitute the use case (in this case, selecting some rows, clicking some of the toggle buttons at the bottom and sorting some columns), and then close this GUI.

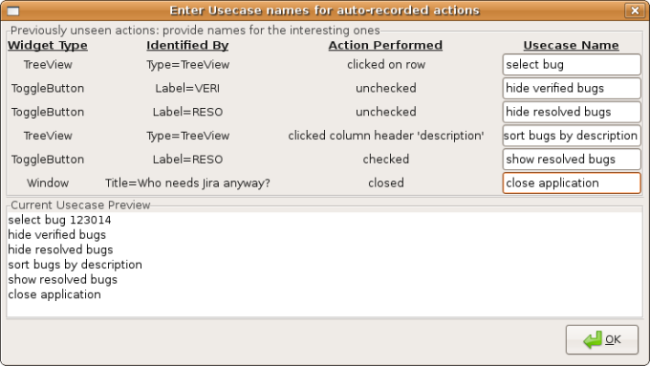

When we close the GUI, PyUseCase informs us that we have performed some actions it does not yet have names for, and produces a dialog for us to fill in with appropriate names. Using the widget types, identifiers and action descriptions it shows us, we work out which row corresponds to which of the things we did. This information then goes into our "UI map file", while the names themselves form the "usecase" on which the test is based, which you can see previewed in the lower window as you type. For example, we might choose names something like this.

(If you leave any item blank, PyUseCase concludes that you are not interested in this event and will not record it in the future.)

The dynamic GUI will then turn the test red and highlight that it has collected new files for us.

Clicking this red line in the test view gives us the view below. The contents of the new files can be seen in textual format in the “Text Info” window by single-clicking them (as shown), or viewed by double-clicking them if they are too large. The "usecase.bugs" file provides a description of what we did, while the "output.bugs" file provides PyUseCase's auto-generated description of what the GUI looked like while we were doing it.

If we made a mistake while recording, we should simply quit the dynamic GUI at this point and repeat the procedure (the names we entered for PyUseCase will still be kept). If we are happy with the recording, we save the results by pressing the save button with the test selected.

Unless we disabled this in the “Record” dialog at the start, TextTest will then automatically rerun the test in replay mode in order to collect the output files. This is needed because PyUseCase (for example) produces different text when replaying than when recording, and it is the replay text we are interested in. The replay is done invisibly, as it is assumed always to work. It can also be configured to happen via the dynamic GUI, which is useful if you don't trust your recorder to replay what it has just recorded!

Messages in the status bar at the very bottom of the static GUI keep you posted as to progress, anyway. When all is done, it should look like this:

Note that the "definition file" is now the usecase file recorded by PyUseCase. Naturally, there could be command line options as well if desired. This is much the same as the final result of the “hello world” setup.

If we visit the "Config" tab to the right, we will now find both a TextTest config file and a PyUseCase config file, "ui_map.conf", under "pyusecase_files". If you open it by double-clicking it, you can see that it essentially contains the information you entered before. When the GUI changes, we will need to edit this file, but hopefully we can minimise changes to the tests themselves.

PyUseCase identifies widgets by name, title, label and type, in that order. Here we are identifying the main tree view by its type alone, which will break down as soon as the UI contains another tree view. Likewise, the title of our window might vary from run to run. For robustness, it's therefore often necessary to assign widget names in your code, at which point this file should be updated to use "Name=Bug tree view" instead of "Type=TreeView".
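The lookup order can be modelled in a few lines. This is a sketch of the rule as stated above, not PyUseCase's own code, and `widget_identifier` is a hypothetical helper. In PyGTK, widget names are assigned with `gtk.Widget.set_name`, e.g. `tree_view.set_name("Bug tree view")`, where `tree_view` stands for whatever variable holds the widget in your code.

```python
from typing import Optional


def widget_identifier(name: Optional[str] = None,
                      title: Optional[str] = None,
                      label: Optional[str] = None,
                      widget_type: Optional[str] = None) -> str:
    """Return the first available identifier, trying name, title, label
    and type in that order (a model of the rule, not PyUseCase code)."""
    candidates = [("Name", name), ("Title", title),
                  ("Label", label), ("Type", widget_type)]
    for key, value in candidates:
        if value:
            return f"{key}={value}"
    raise ValueError("widget has no usable identifier")
```

With no name, title or label, the tree view falls through to `Type=TreeView`; once it is given a name, the first check succeeds and the entry becomes `Name=Bug tree view`, which no longer depends on there being only one TreeView in the UI.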

Clearly, there is no need to run the test once in order to collect the output as we did with “hello world”: this is built into the “Record use-case” operation.

Currently, if we run the test, the GUI will pop up and the actions will be performed as fast as possible.

On UNIX, TextTest will try to stop the SUT's GUIs from popping up by using the virtual display server Xvfb (a standard UNIX tool). For each run of the tests, it will start such a server, point the SUT's DISPLAY variable at it and close the server again at the end. Ordinarily, it will run it like this:

Xvfb -ac -audit 2 :<display number>

but you can supply additional arguments via the setting "virtual_display_extra_args", which will be appended before the display number.
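A minimal sketch of the command line and environment described above, assuming `virtual_display_extra_args` has already been split into a list of arguments. `xvfb_command` and `sut_environment` are hypothetical helpers, not TextTest functions:

```python
import os


def xvfb_command(display_number, extra_args=()):
    """Default Xvfb arguments, then any extra arguments, then the
    display number last, as described in the text."""
    return ["Xvfb", "-ac", "-audit", "2", *extra_args, f":{display_number}"]


def sut_environment(display_number, base_env=None):
    """Copy the environment and point DISPLAY at the virtual server."""
    env = dict(os.environ if base_env is None else base_env)
    env["DISPLAY"] = f":{display_number}"
    return env
```

For example, extra arguments such as `-screen 0 1280x1024x24` (a standard Xvfb option setting screen size and depth) would end up just before `:<display number>`.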

It will use its own process ID (modulo 32768) as the display number, to help ensure uniqueness. After starting Xvfb, it will wait up to the number of seconds specified by the environment variable "TEXTTEST_XVFB_WAIT" (30 by default) for Xvfb to report that it's ready to receive connections. If that doesn't happen, for example because the display is in use, it will kill Xvfb and try again with another display number.
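The display-number choice and the wait-and-retry behaviour can be sketched as follows. Starting, polling and killing the server are passed in as functions so the logic can be shown without a real Xvfb. The helper names, the `max_attempts` limit and the choice of simply trying the next number are all assumptions, since the text only says TextTest tries "another display number".

```python
import os
import time

DEFAULT_WAIT = 30  # seconds; overridden by TEXTTEST_XVFB_WAIT


def initial_display_number(pid=None):
    """Derive a display number from the process ID, modulo 32768."""
    return (os.getpid() if pid is None else pid) % 32768


def start_virtual_display(start_server, is_ready, kill_server, pid=None,
                          max_attempts=5, environ=None, poll_interval=0.1):
    """Start a server, wait up to TEXTTEST_XVFB_WAIT seconds for it to be
    ready, and on timeout kill it and retry on another display.
    Assumption: "another display" is simply the next number."""
    env = os.environ if environ is None else environ
    wait = float(env.get("TEXTTEST_XVFB_WAIT", DEFAULT_WAIT))
    display = initial_display_number(pid)
    for _ in range(max_attempts):
        server = start_server(display)
        deadline = time.monotonic() + wait
        while time.monotonic() < deadline:
            if is_ready(server):
                return display, server
            time.sleep(poll_interval)
        # Not ready in time (e.g. display already in use): give up on it.
        kill_server(server)
        display = (display + 1) % 32768
    raise RuntimeError("could not start a virtual display")
```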

If you only have Xvfb installed remotely, you can specify machines via the config entry “virtual_display_machine”. This will cause TextTest to start the Xvfb process on that machine if it isn't possible to do so locally.

On Windows, target GUIs are started with the Windows flag to hide them, so a similar effect occurs. However, note that this operation is not recursive, so any dialogs, extra windows etc. that get started will (unfortunately) appear anyway.

Sometimes we want to watch the behaviour of the GUI during a test. To do this, we set a default speed in the config file, using the setting 'slow_motion_replay_speed:<delay>', where <delay> is the number of seconds we want it to pause between GUI actions. (This is translated to the environment variable USECASE_REPLAY_DELAY, which is forwarded to the system under test, or to the "delay" property if JUseCase is being used.) For a particular run, we then select “slow motion replay mode” from the “how to run” tab in the static GUI. This will force the GUI to pop up for this run, whatever is set in the virtual_display_machine entry.
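With PyUseCase you do not need to handle this yourself, but if you write your own use case recorder, honouring the delay might look something like the sketch below. It assumes replayed actions are plain Python callables; `replay_delay` and `replay` are hypothetical names.

```python
import os
import time


def replay_delay(environ=None):
    """Seconds to pause between replayed actions, read from
    USECASE_REPLAY_DELAY (0 if unset or empty)."""
    env = os.environ if environ is None else environ
    return float(env.get("USECASE_REPLAY_DELAY", "0") or 0)


def replay(actions, environ=None, sleep=time.sleep):
    """Run each action in turn, pausing between consecutive actions."""
    delay = replay_delay(environ)
    for index, action in enumerate(actions):
        if index > 0 and delay > 0:
            sleep(delay)
        action()
```

Injecting `sleep` keeps the pause logic testable; in normal use the default `time.sleep` is used.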

In summary, our config file should look something like this:
