
Documentation for 3.16

Running tests in parallel on a grid

The “queuesystem” configuration

When you have more than one machine at your disposal for

testing purposes, it is very beneficial to be able to utilise

all of them from the same test run. “Grid Engine”

software allows you to do this, so that tests can run in

parallel across a network. This greatly speeds up testing,

naturally, and means far more tests (or longer tests) can be run

while somebody is waiting on the results.

TextTest's queuesystem configuration is enabled by setting

the config file entry “config_module” to

“queuesystem”. It operates against an abstract grid

engine, which is basically a Python API. Two implementations of

this are provided, for the free open source grid engine SGE

(which sadly doesn't work on Windows) and Platform

Computing's LSF

(which does, but costs money). You choose between these by

setting the config file entry “queue_system_module”

to “SGE” or “LSF”; it defaults to “SGE”. By default, TextTest will submit all tests to the grid engine. It

is still possible to run tests locally, as with the default

configuration: on the command line, provide "-l".

From the static GUI there are three settings for the "Use grid" option. "Always" and "Never" speak for themselves.

The default, however, is to apply a threshold based on the number of tests selected: if there are more tests than the threshold,

the grid will be used; otherwise they will be run locally. The threshold is configured via the config file setting

"queue_system_min_test_count", which defaults to 0, so the default behaves the same as the "Always" option.

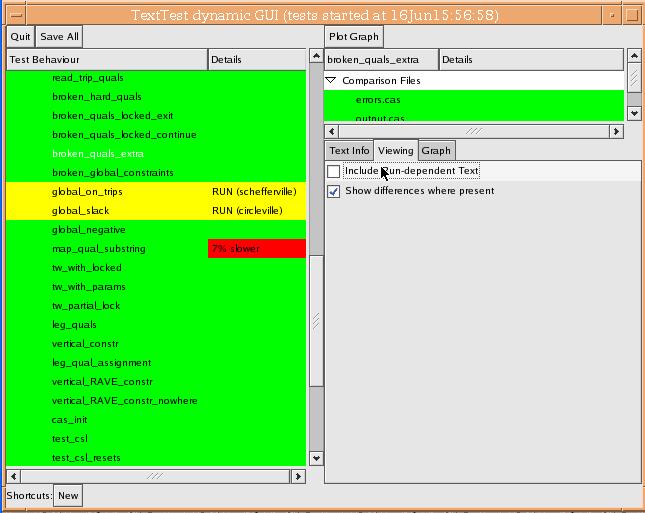
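The choice between grid and local running described above can be sketched roughly as follows. This is only an illustration, not TextTest's own code: the function and parameter names are hypothetical, and it assumes that "more tests" means strictly more than the configured threshold.

```python
def should_use_grid(test_count, use_grid="threshold", min_test_count=0, run_locally=False):
    """Decide whether a run of test_count tests is submitted to the grid engine.

    use_grid stands for the static GUI's "Use grid" option, min_test_count for the
    "queue_system_min_test_count" setting and run_locally for "-l" on the command line.
    """
    if run_locally or use_grid == "never":
        return False
    if use_grid == "always":
        return True
    # Threshold mode. Assumption: the comparison is strict ("more tests than").
    return test_count > min_test_count
```

With the default threshold of 0, any non-empty selection of tests goes to the grid, which matches the "Always" behaviour.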

As soon as each test finishes, the test will go green or red,

and results will be presented. Unlike the default configuration,

the tests will not naturally finish in order. Here is a sample

screenshot, from a very old version of TextTest using SGE:

Some tests have finished and gone green, but others are still running

and hence yellow. TextTest reports their state in the grid

engine (“RUN”) followed by the machine each is

running on in brackets.

Internally, TextTest submits itself to the grid engine and runs a slave process

remotely, which runs the test in question and communicates the

result back to the master process via a socket. It does not ordinarily do

any polling of the grid engine to see what is happening. This means that

if the slave process crashes remotely without reporting in (for example

because of hardware trouble, or because its files aren't mounted on the remote

machine) the test will still be regarded as pending and no error message will be shown.

If there is no active grid engine job and TextTest is still showing "PEND", it's

a good idea to kill the test, which will then poll the grid engine and search for

error log files from the job concerned, and can probably establish what happened to it.
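To illustrate why a crashed slave leaves its test pending, here is a minimal sketch of a master that waits for slaves to report over a socket. It is not TextTest's actual protocol: the "name:outcome" message format and all function names are assumptions made for the example.

```python
import socket
import threading


def run_master(server_sock, expected_reports, results):
    """Accept one connection per expected report and record 'name:outcome' messages."""
    for _ in range(expected_reports):
        conn, _addr = server_sock.accept()  # blocks until some slave reports in
        with conn:
            data = b""
            while True:
                chunk = conn.recv(4096)
                if not chunk:  # the slave closed its end: message complete
                    break
                data += chunk
        name, outcome = data.decode("utf-8").split(":", 1)
        results[name] = outcome


def report_result(master_address, test_name, outcome):
    """What a slave might do once its test has finished."""
    with socket.create_connection(master_address) as sock:
        sock.sendall(f"{test_name}:{outcome}".encode("utf-8"))


def demo():
    """Run a master and two 'slaves' on the local machine and return the results."""
    results = {}
    server_sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_sock.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    server_sock.listen()
    address = server_sock.getsockname()
    master = threading.Thread(target=run_master, args=(server_sock, 2, results))
    master.start()
    report_result(address, "test_a", "succeeded")
    report_result(address, "test_b", "failed")
    master.join()
    server_sock.close()
    return results
```

Because the master only reacts to incoming connections and never polls, a slave that dies before calling something like `report_result` simply never shows up, and its test stays pending until somebody intervenes.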

As this functionality works with a different configuration

module, additional config file entries, running options and

support scripts are available, over and above those provided by

default:

A grid engine will be configured to have a number of queues,

which will hold jobs (tests in our case) until a CPU becomes

available somewhere, and then dispatch them to that machine.

These queues also handle job priorities: it is generally

possible to set up several queues so that jobs from one will

cause jobs from the other to be suspended. In the case of

testing, it is often useful to prioritise jobs by how long they

are expected to take, so that a one-hour test can be suspended

to allow a five-second test to run. To find out more, read the

documentation of the grid engine of your choice.

As far as TextTest is concerned,

it must decide which queue to submit each test to. The procedure

is as follows:

- If the option “Request

SGE/LSF queue” has been filled in (-q on the command

line), that queue will be used.

- If the config file setting

“default_queue” has been set to something other

than its default value (“texttest_default”), that

queue will be used.

- If neither, the default queue

of the queue system will be used, supposing it has one.
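The three steps above can be sketched as a small function. The function and parameter names are hypothetical; only the “texttest_default” value and the order of the checks come from the description above.

```python
DEFAULT_QUEUE_PLACEHOLDER = "texttest_default"


def choose_queue(requested_queue=None, default_queue=DEFAULT_QUEUE_PLACEHOLDER):
    """Return the queue to submit to, or None to leave the choice to the grid engine."""
    if requested_queue:  # "Request SGE/LSF queue" option, or -q on the command line
        return requested_queue
    if default_queue != DEFAULT_QUEUE_PLACEHOLDER:  # "default_queue" config setting
        return default_queue
    return None  # fall back on the queue system's own default queue


print(choose_queue("short", "normal"))
print(choose_queue(None, "normal"))
print(choose_queue())
```

A derived configuration that selects queues by expected running time would replace this function with its own logic.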

It is often useful to write a

derived configuration to modify this logic, for example to

introduce some mechanism to select queues based on expected time

taken. Resources are used to specify properties of machines

where you wish your job to run. For example, you might want to

request a machine running a particular flavour of Linux, or you

might want a machine with at least 2GB of memory. Such requests

are implemented by resources, in both grid engines. Naturally,

more details can again be had from the documentation of the

queue systems. LSF requests resources via “bsub -R”

on the command line, while SGE uses “qsub -l”.

TextTest must choose which

resources to request on the command line. The procedure here is

to request all of the resources as specified below:

- The value of the option

“Request SGE/LSF resource”, if it has been filled

in (-R on the command line)

- The value of the environment

variable QUEUE_SYSTEM_RESOURCE, if it has been set in an

environment file.

- All resources as generated by

the performance_test_resource functionality, if enabled (see

below).
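As a rough sketch, gathering the resources from these three sources might look like this. The function name, its parameters and the example resource names are hypothetical, and how TextTest combines several resources into a single "-l" or "-R" argument is not shown here.

```python
# Submission program and resource flag for each grid engine, as described above.
RESOURCE_FLAGS = {"SGE": ("qsub", "-l"), "LSF": ("bsub", "-R")}


def collect_resources(option_resource=None, environment=None, performance_resources=()):
    """Return every resource to request, in the order the sources are listed above."""
    environment = environment or {}
    resources = []
    if option_resource:  # "Request SGE/LSF resource" option, or -R on the command line
        resources.append(option_resource)
    env_resource = environment.get("QUEUE_SYSTEM_RESOURCE")
    if env_resource:  # set in an environment file
        resources.append(env_resource)
    resources.extend(performance_resources)  # from performance_test_resource, if enabled
    return resources


print(collect_resources("mem2g", {"QUEUE_SYSTEM_RESOURCE": "linux"}, ["opteron250"]))
```

All resources found are requested together; none of the sources overrides another.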

When tests complete, TextTest will keep the remote test process

alive and try to reuse it for a test with compatible resource requirements. This bypasses

the time needed to submit tests to the queue system and wait for them to be scheduled,

and reduces network traffic. This behaviour, introduced in TextTest 3.10, should

improve throughput considerably, particularly where a large number of short tests need to

be run.

By default, until all tests have been dispatched, TextTest

will reuse remote jobs in this way, but will also continually submit new jobs at the same time.

You can probably improve the throughput further by telling it the maximum number of parallel

processes it can reasonably expect from your grid. To do this, set the config file entry

“queue_system_max_capacity” to this number. It's generally better for this number to be

a bit too high than too low, so if in doubt err on the high side. Once it has submitted this number of jobs it

will then stop submitting and rely on reusing existing jobs.
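The interplay between submitting new jobs and reusing existing ones can be sketched as below. This is an illustration under stated assumptions rather than TextTest's implementation: the names are hypothetical, "compatible" is simplified to "requests exactly the same resources", and `max_capacity=None` stands for the setting not having been configured.

```python
def should_submit_new_job(undispatched_tests, jobs_submitted, max_capacity=None):
    """Keep submitting while tests remain and the capacity limit has not been reached."""
    if undispatched_tests == 0:
        return False
    return max_capacity is None or jobs_submitted < max_capacity


def pick_test_for_idle_slave(pending_tests, slave_resources):
    """Hand an idle remote process the first pending test it is compatible with.

    pending_tests is a list of (name, resources) tuples; the chosen test is removed.
    Returns the test name, or None if nothing compatible is waiting.
    """
    for index, (name, resources) in enumerate(pending_tests):
        if set(resources) == set(slave_resources):  # assumption: identical requests only
            del pending_tests[index]
            return name
    return None
```

Once `should_submit_new_job` starts returning False because the capacity has been reached, all remaining tests are dispatched through reuse alone.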

The dynamic GUI has a number of ways to view the files produced by the tests. With the tests being run remotely via the grid, it can become

an interesting question whether the viewing programs should be started on the remote machine or the local machine.

On the one hand interesting files may be written to paths that are only accessible from the remote machine where

the test ran, but on the other hand viewing programs may not be installed on all nodes in the grid.

When following files produced by remotely run tests, TextTest will always run the "follow_program" ("tail" on UNIX or "baretail" on Windows) by default on the remote machine, as this is supposed to respond quickly to changes and it is therefore better if it doesn't have to wait for the file server

before responding. When viewing files normally, it will by default run the viewer on the local machine. This can however be

configured, for example for files which can only be viewed remotely, using the config file setting "view_file_on_remote_machine",

which can be keyed on file types in the same way as the "view_program" setting whose behaviour it modifies.

As the queuesystem configuration is often used for very

large test suites, from TextTest 3.10 it will start to try and clean up temporary

files before the GUI is closed. Otherwise closing the GUI can appear to take a very

long time.

The default behaviour is now therefore to remove all test data and files belonging to

successful tests remotely, i.e. as soon as they complete. This can be overridden by

providing the "-keepslave" option on the command line, or the equivalent

switch from the Running/Advanced tab in the static GUI, in case you want to examine

the filtering of a successful test, for example.

The queuesystem configuration also provides some improvements

to the default configuration's

functionality for comparing system resource usage in tests.

These mainly concern the concept of a performance

test machine. In the default configuration, tests are run

locally, so all we can do is see if our current machine is

enabled for performance testing. With a grid engine at our

disposal, we can actually request a performance machine for

particular tests. The simplest way to do this is to check the “run on

performance machines only” box from the static GUI

(“-perf” on the command line). That will make sure

the grid engine requests that the test only run on such

machines.

It is also possible to say (for CPU time testing only) that

once tests take a certain amount of time they should always be

run on performance machines only (it is assumed that the

performance of the longest tests is generally the most

interesting). This can be done via the setting

min_time_for_performance_force.
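A possible reading of these two mechanisms, as a sketch only: the function and parameter names are hypothetical, `None` stands for the setting being switched off, and whether the comparison is strict is an assumption.

```python
def must_run_on_performance_machine(perf_option, expected_cpu_seconds,
                                    min_time_for_performance_force=None):
    """Decide whether a test should be restricted to performance machines."""
    if perf_option:  # "run on performance machines only" box, or -perf
        return True
    if min_time_for_performance_force is None:  # assumption: None means "not configured"
        return False
    # Long-running tests are forced onto performance machines (CPU time testing only).
    return expected_cpu_seconds > min_time_for_performance_force
```

For example, with the threshold set to 600, a test expected to take 900 CPU seconds would be forced onto a performance machine while a 30-second test would not.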

There is also an additional mechanism for specifying the

performance machines, which on SGE has to be used instead. The

config file setting 'performance_test_resource' allows you to

identify your performance machines via a grid engine resource,

for example to say “test performance on all Opteron250

machines”. This is generally easier than writing out a

long list of machines, and is compulsory with SGE for more than

one machine. With LSF, you can write out the machines as for the

default configuration if you want to.

The queuesystem configuration also provides an improvement to

the batch mode functionality for

unattended runs. This basically involves adding a horizon when

all remaining tests are killed off and reported as unfinished.

This will be done if it receives the signal SIGUSR2 on UNIX. Both grid engines can be set up to send this signal at a

particular time themselves, which involves submitting the

TextTest batch run itself to the grid engine. In LSF, use “bsub

-t 8:00” to send SIGUSR2 to the job at 8am the next

morning. In SGE, use “qsub -notify”, and then call

“qdel” for the job at the allotted time: this will

also cause the signal to be sent.

Both LSF and SGE have mechanisms to send signals to jobs when

they exceed a certain time limit. It is possible to configure

the queues such that they send SIGXCPU if more than a certain

amount of CPU time has been consumed, or SIGUSR1/SIGUSR2 if too

much wallclock time is consumed.

TextTest will assume this meaning for these signals and

report accordingly. SIGXCPU is always assumed to mean a CPU

limit has been reached. SIGUSR2 is interpreted to mean a kill

notification in SGE and a maximum wallclock time in LSF, while

SIGUSR1 is used the other way round in the two grid engines.
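The interpretation just described amounts to a small lookup table, sketched here with hypothetical names and descriptive strings of our own choosing:

```python
# Meaning assumed for each signal, per grid engine, as described above.
SIGNAL_MEANINGS = {
    "SGE": {
        "SIGXCPU": "CPU time limit reached",
        "SIGUSR1": "wallclock time limit reached",
        "SIGUSR2": "kill notification",
    },
    "LSF": {
        "SIGXCPU": "CPU time limit reached",
        "SIGUSR1": "kill notification",
        "SIGUSR2": "wallclock time limit reached",
    },
}


def interpret_signal(queue_system, signal_name):
    """Return the meaning assumed for a signal under the given grid engine."""
    return SIGNAL_MEANINGS[queue_system].get(signal_name, "not handled specially")


print(interpret_signal("SGE", "SIGUSR2"))
print(interpret_signal("LSF", "SIGUSR2"))
```

Signal names are kept as strings so that the table can be read on any platform, including those where Python's `signal` module lacks SIGUSR1 and SIGUSR2.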

TextTest will also install signal handlers

for these three signals in the SUT process, such that they will be ignored

unless the SUT decides otherwise. This is mostly to prevent unnecessary core

files from SIGXCPU and to allow TextTest to receive the signals itself and terminate

the SUT when it's ready to.
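One way to start a process with these signals ignored, on UNIX, is to set them to "ignore" in the child before the program is executed, since ignored signals stay ignored across `exec` unless the program installs its own handlers. The sketch below shows that general technique with Python's standard library; it is not necessarily how TextTest does it, and the function names and the stand-in SUT are hypothetical.

```python
import signal
import subprocess
import sys

GRID_SIGNALS = ("SIGXCPU", "SIGUSR1", "SIGUSR2")


def ignore_grid_signals():
    """Runs in the child process just before the SUT is executed."""
    for name in GRID_SIGNALS:
        signal.signal(getattr(signal, name), signal.SIG_IGN)


def run_sut(command):
    """Start the SUT with the three signals ignored and return its exit code (UNIX only)."""
    process = subprocess.Popen(command, preexec_fn=ignore_grid_signals)
    return process.wait()


# A stand-in SUT that sends SIGUSR1 to itself: it only exits normally if the signal is ignored.
DEMO_SUT = [sys.executable, "-c", "import os, signal; os.kill(os.getpid(), signal.SIGUSR1)"]
```

Calling `run_sut(DEMO_SUT)` lets the stand-in SUT survive its own SIGUSR1, whereas started without the `preexec_fn` it would be terminated by the signal.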

To configure SGE to play nicely with this, it's useful to set

a notify period of about 60 seconds when jobs are killed

(TextTest submits all jobs with the -notify flag). By default,

SIGUSR1 is also used as a suspension notification in SGE,

which TextTest does not expect or handle. It's therefore

important to disable the NOTIFY_SUSP parameter in SGE if you

don't want tests to fail spuriously with RUNLIMIT

whenever they would be suspended:

Both grid engines have functionality for testing systems

which are themselves parallel, setting aside several CPUs for

the same test. TextTest integrates with this functionality also.

This is basically done via the environment variable

QUEUE_SYSTEM_PROCESSES, which says how many CPUs will be needed

for each test under that point in the test suite. In LSF this

basically translates to the “-n” option to “bsub”.

In SGE, you need to use an SGE parallel environment (read the

SGE docs!); this is specified via the config file entry

“parallel_environment_name”.

The performance machine functionality described above still

works here. In this case TextTest will ask the queue system for

all machines that have been used, and only if they are all

performance machines will performance be compared.
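The rule for parallel tests boils down to an "all machines" check, sketched here with hypothetical names. Treating an empty machine list as "do not compare" is our own assumption.

```python
def should_compare_performance(machines_used, performance_machines):
    """Compare performance only if every machine the test ran on is a performance machine."""
    if not machines_used:  # assumption: nothing reported means nothing to compare
        return False
    return all(machine in performance_machines for machine in machines_used)


print(should_compare_performance(["node1", "node2"], {"node1", "node2", "node3"}))
print(should_compare_performance(["node1", "node9"], {"node1", "node2", "node3"}))
```

A single non-performance machine among those used is enough to disable the comparison for that test.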

You can provide additional arguments on the command line to the grid engine submission program ("qsub" in SGE or "bsub" in LSF) by specifying the variable "QUEUE_SYSTEM_SUBMIT_ARGS" in your environment file.

You can configure the TextTest program that is run by the slave process via the environment variable "TEXTTEST_SLAVE_CMD", which defaults to just running "texttest.py". The main point of this is if you need a startup script to find the right version of Python on the remote machine, for example, or if you want to plug in developer tools like profilers and coverage analysers.
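As a rough picture of how these two variables feed into a submission, consider the sketch below. The function name is hypothetical, and the real command line contains considerably more (queue, resources, slave options and so on); the position of the extra arguments is an assumption.

```python
import shlex

SUBMIT_PROGRAMS = {"SGE": "qsub", "LSF": "bsub"}


def build_submit_command(queue_system, environment):
    """Assemble a simplified submission command from the two environment variables."""
    command = [SUBMIT_PROGRAMS[queue_system]]
    # Extra arguments for qsub/bsub, split as a shell would split them.
    command += shlex.split(environment.get("QUEUE_SYSTEM_SUBMIT_ARGS", ""))
    # The program run remotely; defaults to plain "texttest.py".
    command += shlex.split(environment.get("TEXTTEST_SLAVE_CMD", "texttest.py"))
    return command


print(build_submit_command("SGE", {}))
```

Setting TEXTTEST_SLAVE_CMD to a wrapper script of your own replaces the final element, which is where a startup script, profiler or coverage tool would be plugged in.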
